SDC RPC to Kafka (Deprecated)

The SDC RPC to Kafka origin reads data from one or more SDC RPC destinations and writes it immediately to Kafka. Use the SDC RPC to Kafka origin in an SDC RPC destination pipeline. However, the SDC RPC to Kafka origin is now deprecated and will be removed in a future release. We recommend using the SDC RPC origin.

Use the SDC RPC to Kafka origin when you have multiple SDC RPC origin pipelines with data that you want to write to Kafka without additional processing.

Like the SDC RPC origin, the SDC RPC to Kafka origin reads data from an SDC RPC destination in another pipeline. However, the SDC RPC to Kafka origin is optimized to write data from multiple pipelines directly to Kafka. When you use this origin, you cannot perform additional processing before writing to Kafka.

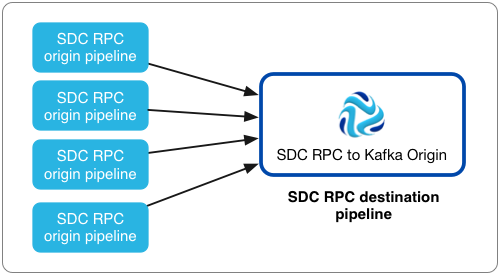

Here is an example of the recommended architecture for using the SDC RPC to Kafka origin:

When you configure the SDC RPC to Kafka origin, you define the port that the origin listens on for data, the SDC RPC ID, the maximum number of concurrent requests, and the maximum batch request size. You can also configure SSL/TLS properties, including default transport protocols and cipher suites.

You also need to configure connection information for Kafka, including the broker URI, topic to write to, and maximum message size. You can add Kafka configuration properties and enable Kafka security as needed.

Pipeline Configuration

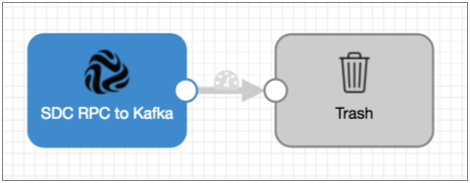

When you use an SDC RPC to Kafka origin in a pipeline, connect the origin to a Trash destination.

The SDC RPC to Kafka origin writes records directly to Kafka. The origin does not pass records to its output port, so you cannot perform additional processing or write the data to other destination systems.

However, since a pipeline requires a destination, you should connect the origin to the Trash destination to satisfy pipeline validation requirements.

A pipeline with the SDC RPC to Kafka origin should look like this:

Concurrent Requests

You can specify the maximum number of requests the SDC RPC to Kafka origin handles at one time.

An SDC RPC destination in an origin pipeline sends a request to the SDC RPC to Kafka origin when it wants to pass a batch of data to the origin. If you have one origin pipeline passing data to the SDC RPC to Kafka origin, you can set the maximum number of concurrent requests to 1 because the destination processes one batch of data at a time.

Typically, you would have more than one pipeline passing data to this origin. In this case, you should assess the number of origin pipelines, the expected output of the pipelines, and the resources of the Data Collector machine, and then tune the property as needed to improve pipeline performance.

For example, if you have 100 origin pipelines passing data to the SDC RPC to Kafka origin, but the pipelines produce data slowly, you can set the maximum to 20 to prevent these pipelines from using too much of the Data Collector resources during spikes in volume. Or, if the Data Collector has no resource issues and you want it to process data as quickly as possible, you can set the maximum to 90 or 100. Note that the SDC RPC destination also has advanced properties for retry and back off periods that can be used to help tune performance.

Batch Request Size, Kafka Message Size, and Kafka Configuration

Configure the SDC RPC to Kafka maximum batch request size and Kafka message size properties in relation to each other and to the maximum message size configured in Kafka.

The Max Batch Request Size (MB) property determines the maximum size of the batch of data that the origin accepts from each SDC RPC destination. Upon receiving a batch of data, the origin immediately writes the data to Kafka.

To promote peak performance, the origin writes as many records as possible into a single Kafka message. The Kafka Max Message Size (KB) property determines the maximum size of the message that it creates.

For example, say the origin uses the default 100 MB for the maximum batch request size and the default 900 KB for the maximum message size, and Kafka uses the 1 MB default for message.max.bytes.

When the origin requests a batch of data, it receives up to 100 MB of data at a time. When the origin writes to Kafka, it groups records into as few messages as possible, including up to 900 KB of records in each message. Since the message size is less than the Kafka 1 MB limit, the origin successfully writes all messages to Kafka.

If a record is larger than the 900 KB maximum message size, the origin generates an error and does not write the record - or the batch that includes the record - to Kafka. The SDC RPC destination that provided the batch with the oversized record processes the batch based on stage error record handling.
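The sizing rules above can be modeled with a short Python sketch. This is an illustration only, not the origin's actual implementation: the names `group_into_messages` and `RecordTooLargeError`, the in-order greedy packing, and the choice to treat 1 KB as 1024 bytes are all assumptions made for the example.

```python
from typing import List

KB = 1024          # assumption: 1 KB = 1024 bytes
MB = 1024 * KB


class RecordTooLargeError(ValueError):
    """Hypothetical error for a record larger than the maximum message size."""


def group_into_messages(record_sizes: List[int], max_message_bytes: int) -> List[List[int]]:
    """Pack record sizes, in order, into messages no larger than max_message_bytes."""
    messages: List[List[int]] = []
    current: List[int] = []
    current_bytes = 0
    for size in record_sizes:
        if size > max_message_bytes:
            # Models the documented behavior: the whole batch is rejected.
            raise RecordTooLargeError(
                f"record of {size} bytes exceeds {max_message_bytes} bytes"
            )
        if current and current_bytes + size > max_message_bytes:
            # The current message is full, so start a new one.
            messages.append(current)
            current, current_bytes = [], 0
        current.append(size)
        current_bytes += size
    if current:
        messages.append(current)
    return messages


# Seven 300 KB records with a 900 KB maximum message size.
batch = [300 * KB] * 7
messages = group_into_messages(batch, 900 * KB)
print(len(messages))  # prints 3
```

In this model every message stays at or below 900 KB, which is under the 1 MB Kafka limit used in the example. A single 901 KB record would raise the error and nothing from that batch would be written.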

Additional Kafka Properties

You can add custom Kafka configuration properties to the SDC RPC to Kafka origin.

When you add a Kafka configuration property, enter the exact property name and the value. The stage does not validate the property names or values.

Several properties are defined by default. You can edit or remove these properties as necessary:

- key.serializer.class

- metadata.broker.list

- partitioner.class

- producer.type

- serializer.class

Enabling Kafka Security

You can configure the SDC RPC to Kafka origin to connect securely to Kafka through SSL/TLS, Kerberos, or both.

Enabling SSL/TLS

Perform the following steps to enable the SDC RPC to Kafka origin to use SSL/TLS to connect to Kafka.

- To use SSL/TLS to connect, first make sure Kafka is configured for SSL/TLS as described in the Kafka documentation.

- On the General tab of the stage, set the Stage Library property to the appropriate Apache Kafka version.

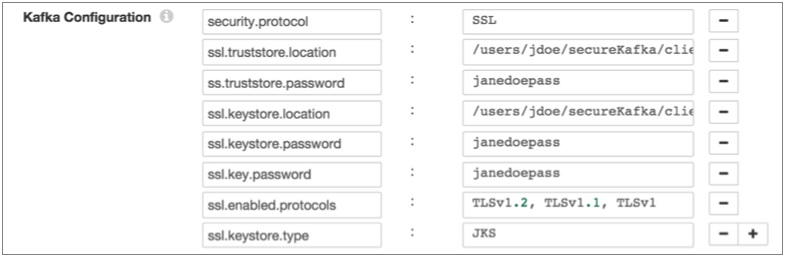

- On the Kafka tab, add the security.protocol Kafka configuration property and set it to SSL.

- Then add and configure the following SSL Kafka properties:

  - ssl.truststore.location

  - ssl.truststore.password

  When the Kafka broker requires client authentication - when the ssl.client.auth broker property is set to "required" - add and configure the following properties:

  - ssl.keystore.location

  - ssl.keystore.password

  - ssl.key.password

  Some brokers might require adding the following properties as well:

  - ssl.enabled.protocols

  - ssl.truststore.type

  - ssl.keystore.type

For details about these properties, see the Kafka documentation.

For example, the following properties allow the stage to use SSL/TLS to connect to Kafka with client authentication:
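As a hedged sketch, such a set of properties can be written out and checked in Python. The property names come from the steps above. The file paths, the placeholder passwords, and the helper `missing_properties` are hypothetical and exist only for this illustration.

```python
# Hypothetical paths and placeholder passwords, for illustration only.
ssl_properties = {
    "security.protocol": "SSL",
    "ssl.truststore.location": "/etc/security/kafka/truststore.jks",
    "ssl.truststore.password": "<truststore password>",
    # Needed only when the broker sets ssl.client.auth to "required".
    "ssl.keystore.location": "/etc/security/kafka/keystore.jks",
    "ssl.keystore.password": "<keystore password>",
    "ssl.key.password": "<key password>",
}

REQUIRED = [
    "security.protocol",
    "ssl.truststore.location",
    "ssl.truststore.password",
]
REQUIRED_FOR_CLIENT_AUTH = [
    "ssl.keystore.location",
    "ssl.keystore.password",
    "ssl.key.password",
]


def missing_properties(props, client_auth):
    """Return the expected property names that are absent from props."""
    names = REQUIRED + (REQUIRED_FOR_CLIENT_AUTH if client_auth else [])
    return [name for name in names if name not in props]


# Print each entry as a name and value pair to enter on the Kafka tab.
for name, value in ssl_properties.items():
    print(f"{name} = {value}")
```

Because the stage does not validate property names, a small check like `missing_properties(ssl_properties, client_auth=True)` can catch an omitted entry before you start the pipeline.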

Enabling Kerberos (SASL)

When you use Kerberos authentication, Data Collector uses the Kerberos principal and keytab to connect to Kafka. Perform the following steps to enable the SDC RPC to Kafka origin to use Kerberos to connect to Kafka.

- To use Kerberos, first make sure Kafka is configured for Kerberos as described in the Kafka documentation.

- Make sure that Kerberos authentication is enabled for Data Collector, as described in Kerberos Authentication.

- Add the Java Authentication and Authorization Service (JAAS) configuration properties required for Kafka clients based on your installation and authentication type:

  - RPM, tarball, or Cloudera Manager installation without LDAP authentication - If Data Collector does not use LDAP authentication, create a separate JAAS configuration file on the Data Collector machine. Add the following KafkaClient login section to the file:

        KafkaClient {
            com.sun.security.auth.module.Krb5LoginModule required
            useKeyTab=true
            keyTab="<keytab path>"
            principal="<principal name>/<host name>@<realm>";
        };

    For example:

        KafkaClient {
            com.sun.security.auth.module.Krb5LoginModule required
            useKeyTab=true
            keyTab="/etc/security/keytabs/sdc.keytab"
            principal="sdc/sdc-01.streamsets.net@EXAMPLE.COM";
        };

    Then modify the SDC_JAVA_OPTS environment variable to include the following option that defines the path to the JAAS configuration file:

        -Djava.security.auth.login.config=<JAAS config path>

    Modify environment variables using the method required by your installation type.

  - RPM or tarball installation with LDAP authentication - If LDAP authentication is enabled in an RPM or tarball installation, add the properties to the JAAS configuration file used by Data Collector - the $SDC_CONF/ldap-login.conf file. Add the following KafkaClient login section to the end of the ldap-login.conf file:

        KafkaClient {
            com.sun.security.auth.module.Krb5LoginModule required
            useKeyTab=true
            keyTab="<keytab path>"
            principal="<principal name>/<host name>@<realm>";
        };

    For example:

        KafkaClient {
            com.sun.security.auth.module.Krb5LoginModule required
            useKeyTab=true
            keyTab="/etc/security/keytabs/sdc.keytab"
            principal="sdc/sdc-01.streamsets.net@EXAMPLE.COM";
        };

  - Cloudera Manager installation with LDAP authentication - If LDAP authentication is enabled in a Cloudera Manager installation, enable the LDAP Config File Substitutions (ldap.login.file.allow.substitutions) property for the StreamSets service in Cloudera Manager.

    If the Use Safety Valve to Edit LDAP Information (use.ldap.login.file) property is enabled and LDAP authentication is configured in the Data Collector Advanced Configuration Snippet (Safety Valve) for ldap-login.conf field, then add the JAAS configuration properties to the same ldap-login.conf safety valve.

    If LDAP authentication is configured through the LDAP properties rather than the ldap-login.conf safety valve, add the JAAS configuration properties to the Data Collector Advanced Configuration Snippet (Safety Valve) for generated-ldap-login-append.conf field.

    Add the following KafkaClient login section to the appropriate field as follows:

        KafkaClient {
            com.sun.security.auth.module.Krb5LoginModule required
            useKeyTab=true
            keyTab="_KEYTAB_PATH"
            principal="<principal name>/_HOST@<realm>";
        };

    For example:

        KafkaClient {
            com.sun.security.auth.module.Krb5LoginModule required
            useKeyTab=true
            keyTab="_KEYTAB_PATH"
            principal="sdc/_HOST@EXAMPLE.COM";
        };

    Cloudera Manager generates the appropriate keytab path and host name.

- On the General tab of the stage, set the Stage Library property to the appropriate Apache Kafka version.

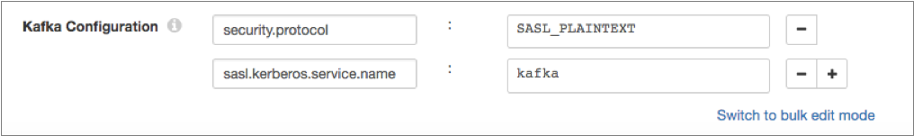

- On the Kafka tab, add the security.protocol Kafka configuration property, and set it to SASL_PLAINTEXT.

- Then, add the sasl.kerberos.service.name configuration property, and set it to kafka.

For example, the following Kafka properties enable connecting to Kafka with Kerberos:
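A minimal sketch of those two properties, written as a Python dictionary so the names and values from the steps above are easy to copy. The variable name `kerberos_properties` is an assumption made for this illustration.

```python
# Kafka configuration properties for Kerberos (SASL) without SSL/TLS,
# taken from the steps above.
kerberos_properties = {
    "security.protocol": "SASL_PLAINTEXT",
    "sasl.kerberos.service.name": "kafka",
}

# Print each entry as a name and value pair to enter on the Kafka tab.
for name, value in kerberos_properties.items():
    print(f"{name} = {value}")
```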

Enabling SSL/TLS and Kerberos

You can enable the SDC RPC to Kafka origin to use SSL/TLS and Kerberos to connect to Kafka.

- Make sure Kafka is configured to use SSL/TLS and Kerberos (SASL) as described in the Kafka documentation.

- Make sure that Kerberos authentication is enabled for Data Collector, as described in Kerberos Authentication.

- Add the Java Authentication and Authorization Service (JAAS) configuration properties required for Kafka clients based on your installation and authentication type:

  - RPM, tarball, or Cloudera Manager installation without LDAP authentication - If Data Collector does not use LDAP authentication, create a separate JAAS configuration file on the Data Collector machine. Add the following KafkaClient login section to the file:

        KafkaClient {
            com.sun.security.auth.module.Krb5LoginModule required
            useKeyTab=true
            keyTab="<keytab path>"
            principal="<principal name>/<host name>@<realm>";
        };

    For example:

        KafkaClient {
            com.sun.security.auth.module.Krb5LoginModule required
            useKeyTab=true
            keyTab="/etc/security/keytabs/sdc.keytab"
            principal="sdc/sdc-01.streamsets.net@EXAMPLE.COM";
        };

    Then modify the SDC_JAVA_OPTS environment variable to include the following option that defines the path to the JAAS configuration file:

        -Djava.security.auth.login.config=<JAAS config path>

    Modify environment variables using the method required by your installation type.

  - RPM or tarball installation with LDAP authentication - If LDAP authentication is enabled in an RPM or tarball installation, add the properties to the JAAS configuration file used by Data Collector - the $SDC_CONF/ldap-login.conf file. Add the following KafkaClient login section to the end of the ldap-login.conf file:

        KafkaClient {
            com.sun.security.auth.module.Krb5LoginModule required
            useKeyTab=true
            keyTab="<keytab path>"
            principal="<principal name>/<host name>@<realm>";
        };

    For example:

        KafkaClient {
            com.sun.security.auth.module.Krb5LoginModule required
            useKeyTab=true
            keyTab="/etc/security/keytabs/sdc.keytab"
            principal="sdc/sdc-01.streamsets.net@EXAMPLE.COM";
        };

  - Cloudera Manager installation with LDAP authentication - If LDAP authentication is enabled in a Cloudera Manager installation, enable the LDAP Config File Substitutions (ldap.login.file.allow.substitutions) property for the StreamSets service in Cloudera Manager.

    If the Use Safety Valve to Edit LDAP Information (use.ldap.login.file) property is enabled and LDAP authentication is configured in the Data Collector Advanced Configuration Snippet (Safety Valve) for ldap-login.conf field, then add the JAAS configuration properties to the same ldap-login.conf safety valve.

    If LDAP authentication is configured through the LDAP properties rather than the ldap-login.conf safety valve, add the JAAS configuration properties to the Data Collector Advanced Configuration Snippet (Safety Valve) for generated-ldap-login-append.conf field.

    Add the following KafkaClient login section to the appropriate field as follows:

        KafkaClient {
            com.sun.security.auth.module.Krb5LoginModule required
            useKeyTab=true
            keyTab="_KEYTAB_PATH"
            principal="<principal name>/_HOST@<realm>";
        };

    For example:

        KafkaClient {
            com.sun.security.auth.module.Krb5LoginModule required
            useKeyTab=true
            keyTab="_KEYTAB_PATH"
            principal="sdc/_HOST@EXAMPLE.COM";
        };

    Cloudera Manager generates the appropriate keytab path and host name.

- On the General tab of the stage, set the Stage Library property to the appropriate Apache Kafka version.

- On the Kafka tab, add the security.protocol property and set it to SASL_SSL.

- Then, add the sasl.kerberos.service.name configuration property, and set it to kafka.

- Then add and configure the following SSL Kafka properties:

  - ssl.truststore.location

  - ssl.truststore.password

  When the Kafka broker requires client authentication - when the ssl.client.auth broker property is set to "required" - add and configure the following properties:

  - ssl.keystore.location

  - ssl.keystore.password

  - ssl.key.password

  Some brokers might require adding the following properties as well:

  - ssl.enabled.protocols

  - ssl.truststore.type

  - ssl.keystore.type

For details about these properties, see the Kafka documentation.
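The three security setups in this section differ mainly in the value of security.protocol. The Python sketch below summarizes that choice and builds the property set for the combined case. The function name `security_protocol`, the truststore path, and the placeholder password are assumptions made for the illustration. PLAINTEXT is included only as the value Kafka clients use when no security is enabled.

```python
def security_protocol(use_ssl: bool, use_kerberos: bool) -> str:
    """Return the security.protocol value for the chosen options."""
    if use_ssl and use_kerberos:
        return "SASL_SSL"
    if use_kerberos:
        return "SASL_PLAINTEXT"
    if use_ssl:
        return "SSL"
    return "PLAINTEXT"


# Properties for SSL/TLS and Kerberos together, following the steps above.
# The path and password are hypothetical placeholders.
combined_properties = {
    "security.protocol": security_protocol(use_ssl=True, use_kerberos=True),
    "sasl.kerberos.service.name": "kafka",
    "ssl.truststore.location": "/etc/security/kafka/truststore.jks",
    "ssl.truststore.password": "<truststore password>",
}

for name, value in combined_properties.items():
    print(f"{name} = {value}")
```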

Configuring an SDC RPC to Kafka Origin

Configure an SDC RPC to Kafka origin to write data from multiple SDC RPC destinations directly to Kafka.